this post was submitted on 15 Jul 2024

647 points (96.4% liked)

Fuck AI

1147 readers

588 users here now

"We did it, Patrick! We made a technological breakthrough!"

A place for all those who loathe AI to discuss things, post articles, and ridicule the AI hype. Proud supporter of working people. And proud booer of SXSW 2024.

founded 6 months ago

MODERATORS

you are viewing a single comment's thread

view the rest of the comments

view the rest of the comments

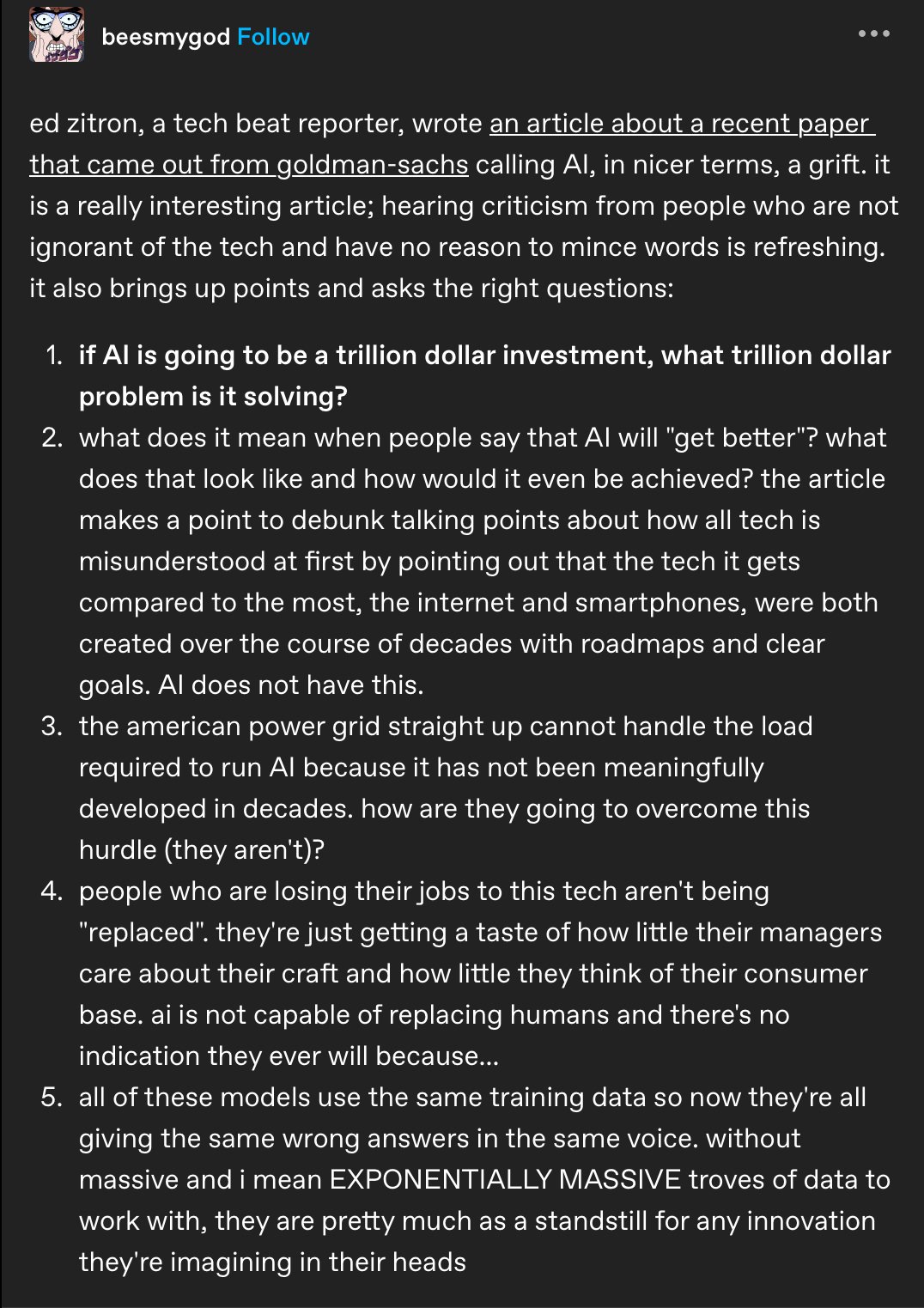

Aren't LLM already pretty much out of (past) training data? Like, they've already chewed through Reddit/Facebook etc and are now caught up to current posts. Of course people will continue talking online and they'll continue to use it to train AI. But if devouring decades of human data, basically everything online, resulted in models that hallucinate, lie to us, and regurgitate troll posts, how can it reach the exponential improvement they promise us!? It already has all the data, has been trained on it, and the average person still sees no value in it...

Your mistake is in thinking AI is giving incorrect responses. What if we simply change our definition of correctness and apply the rubric that whatever AI creates must be superior to human work product? What if we just assume AI is right and work backwards from there?

Then AI is actually perfect and the best thing to feed AI as training data is more AI output. That's the next logical step forward. Eventually, we won't even need humans. We'll just have perfect machines perfectly executing perfection.

What if we invent inbred robots?

That's pretty much what neural networks are

My wife works for a hospital system and they now interact with a chat bot. Somehow it's HIPAA compliant, I dunno. But I said to her, all it's doing is learning the functions of you and your coworkers, and it will eventually figure out how to streamline your position. So theres more to learn, but it's moved into private sectors too.

I mean, happily, chatbots are not really capable of learning like that.

So she's got a while, there.

Hopefully not a while, but that's a story for another day.

They have the chatbots reading emails, in her words, to make them (the emails) more enthusiastic. I don't even ask questions, I'm very insulated from her field and corporate workplaces in general, and admittedly view a lot of what happens there as completely outrageous, but is probably just par for the course.

And that data that it has been trained on is mostly "pre-GPT". They're going to have to spend another untold fortune in tagging and labeling data before training the newer ones because if they train on AI-generated content they will be prone to rot.

Me too.