"tenant of white supremacy"

White Supremacy is the worst landlord.

Big brain tech dude got yet another clueless take over at HackerNews etc? Here's the place to vent. Orange site, VC foolishness, all welcome.

This is not debate club. Unless it’s amusing debate.

For actually-good tech, you want our NotAwfulTech community

Well spotted, I'd kinda checked out by that point, to be honest.

ChatGPT is great because you can use it to show a potential employer how good your writing is for that writing job they'll totally pay you to use ChatGPT to do.

It is and always has been racism that has stopped bad writers from getting writing jobs.

/s

Like, there is definitely racism in the hiring process and in how writing is judged, but it comes from the fact that white people, and white people alone, don't have to code-switch in order to be taken seriously. The problem isn't that bad writers are discriminated against; it's that nonwhite people have to turn on their "white voice" in order to be recognized as good writers. Giving everyone a white robot that can functionally take their place doesn't actually make nonwhite people any more accepted. It's the same old bullshit about how anonymity means 4chan can't be racist.

I'm actually pretty sympathetic to the value of even the most sneer-worthy technologies as accessibility tools, but that has to come with an acknowledgement of the limitations of those tools and is anathema to the rot economy trying to sell them as a panacea to any problem.

It's just a tool, like cars! My definition of tools is things that are being forced on us even though they're terrible for the environment and make everyone's life worse!

It's a tool, just like cars, in that both are terrible for the environment and risk the survival of the human species as well as countless ecosystems.

But not in the cool way that the people selling them say they endanger the survival of life on this planet, just in the boring climate catastrophe ways that people have been trying to get taken seriously since the fucking 70s.

Tenant was that Christopher Nolan movie with the bad audio. Quality comparison.

No, that was Tenet, you're thinking of a tent.

No, a tent is a shelter made out of fabric, you're thinking of Tencent

No, Tencent is a Chinese tech company, you're thinking of tenement.

No, that's housing subdivided for rent. You're thinking of Tennant's.

No, Tennants is an auction house based at Leyburn in North Yorkshire, England. You're thinking of tenant.

brb, time to go shout 'fucking nazis tenants' at my local library.

Imagine judging someone for a job about communicating with people on their ability to communicate with people effectively.

New hire firefighter [leaning against a dumpster]: yeah I used the AI that puts out fires to get this job. They would have been able to discriminate against me if I hadn't done that. Glad that in this crazy fucked up trash fire of a world, there's still something out there helping to level the playing field.

Veteran firefighter: that trash behind you is literally on fire

If everyone can write well now then explain this post.

Surely that's an AI-generated pfp

apparently she is a real known person from military twitter

actually i feel a bit bad about it now https://www.abc.net.au/news/2023-01-27/daniella-cult-the-family-joined-the-army-toxic-control/101895164

my heuristic: I can understand a shitty past giving rise to bad reactions, but the moment you start choosing bad things with current-era things I rapidly start losing grace and patience

(and yeah I know there's a continuum of stuff between A and B, but anyone showing up in a fucking news article of this shape is generally well past accident)

My understanding is that through her experience, she has developed a career around analysing cults (as well as other things) which is great! We need that in the world.

However, she has unfortunately missed the areas where her expertise would be really insightful (SV itself, basically) and taken this weird tack. Dunning-Kruger be like that sometimes.

Spoken like someone who thinks they can cheat their way to talent

Ya can't

Not talent, but you can cheat your way to success and power, which is what they care about.

That's an interesting point. It also aligns with how some of our main characters are involved in trying to organize a steroid olympics.

I looked through her recent replies on threads, and while she has deleted the original post, it looks like she is doubling down on this take:

I guess I’ll say this in a different way, the language around that SOME people are using around chat GPT is the same panic language society always uses with new “advancements” or tools. We saw it when GPS became a thing, we see it now with people freaking out about cursive going away, and oh my, they definitely saw it with calculators. At its core it’s a “geez how are we gonna tell people apart anymore, if we can’t test these skills.” That’s not the only argument about it…

there are plenty of things to talk about about AI But this language definitely exists in the conversation. I recognize it easily, because it’s very, very Culty. It’s this very apocalyptic nature of discussion around it instead of the acknowledgment that human beings will keep building tools that will change everything.

every time a new tool makes certain skills that we test for to rank folks obsolete human beings freak out

To all this I say… wow, she really has decided to just ignore all the discourse about generative AI*, huh? Like, sure, you can use this analogy, but it breaks down pretty quickly, especially when you spend like 5 minutes doing any research on this stuff.

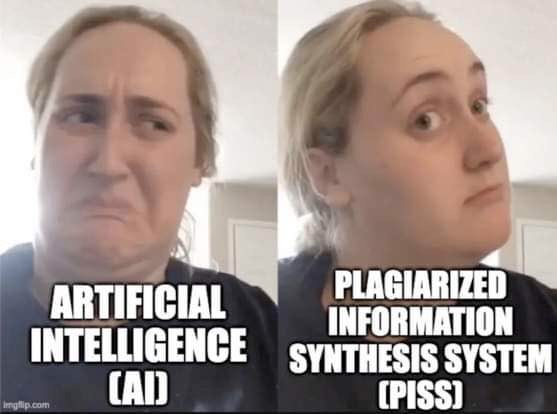

*Would love to start using a new term here because AI oversells the whole concept. I was thinking of tacking something onto procedural generation? Mass PG? LLMPG/LPG? Added benefit of evoking petroleum gas.

That analogy is horseshit because GPS and the death of cursive were both need-based.

Generative AI / ChatGPT for writing fiction fills no need and serves no real purpose, despite them desperately pinwheeling about jamming it everywhere possible.

The only use I've had for writing cursive in 30 years has been to copy out an anti-cheating pledge on a standardized test, because some fucker thought cursive magically makes a pledge 300% more honest.

Have you considered

I've been playing with "mass averaging synthesis machines", variations on "automated plagiarism", "content theftwashing systems"

still undecided tho

I'm still partial to "spicy autocomplete" as a good analogy for how these systems actually work that people have more direct experience with. Take those Facebook posts that give you the first few words and say "what does autocomplete say your most used words are?" and make answering the question use as much electricity as a small city.

Charles Stross suggested "Blarney Engine"

Okay, show me a system that was only trained on data given with explicit permission and hopefully compensation and I'll happily be fine with it.

But that isn't what these capitalists, tech obsessives, etc. have done. They take, take, take and give nothing back.

They do not understand nor care about consent, that's the crux of the issue.

I couldn't care less if all the training data was consensual.

But even if there was an LLM that used only ethical sources, it would still need massive amounts of energy for training and use, so until we're 100% renewable and the whole world gets as much of that energy as it needs ...

This is fair. I was mostly responding to the point made by the person in the picture: that what we really care about is people who lack the skill (or perhaps the ability) to write now being able to. No, that's not really the problem.

But you do raise a good point.

how to let people know you're not a talented writer but think you should be without telling people you're not a talented writer but you think you should be

Except that the ability to communicate is a very real skill that's important for many jobs, and ChatGPT in this case is the equivalent to an advanced version of spelling+grammar check combined with a (sometimes) expert system.

So yeah, if there's somebody who can actually write a good introduction letter and answer questions in an interview, versus somebody who just manages to get ChatGPT to generate a cover letter and answer questions quickly, which one is more likely to be able to communicate well?

Don't get me wrong, it can even the field for some people in some positions. I know somebody who uses it to generate templates for various questions/situations and then puts in the appropriate details, resulting in a well-formatted communication. It's quite useful for people who have professional knowledge of a situation but might have lesser writing ability due to being ESL, etc. However, that is always in a situation where there's time to sanitize the inputs and validate the output, often choosing from and reworking the prompt to get the desired result.

In many cases it's not going to be available past the application/overview process due to privacy concerns and it's still a crap-shoot on providing accurate information. We've already seen cases of lawyers and other professionals also relying on it for professional info that turns out to be completely fabricated.

LLMs are distinctly different from expert systems.

Expert systems are designed to be perfectly correct in specific domains, but not to communicate.

LLMs are designed to generate confident statements with no regard for correctness.

Don't make me tap the sign

This is not debate club

We don't correct people when they are wrong. We do other things.

Yeah. I should have said "illusions of" an expert system or something similar. An LLM can, for example, produce decent working code to meet a given request, but it can also spit out garbage that doesn't work or has major vulnerabilities. It's a crap-shoot.

Should have used chatGPT.

holy hell that inner is all kinds of past-even-wrong

is there some kind of idiocy gdq rankweekend event that I missed the announcement for?

Serious question: what does “stack rank” mean?

See https://en.m.wikipedia.org/wiki/Vitality_curve

It's become really popular in large tech companies and it's fucking stupid.

Thank you. I’ve never even heard of the term before, and didn’t know if it was slang, a typo, or what. It wouldn’t have occurred to me to search for it.