this post was submitted on 21 Oct 2024

Facepalm

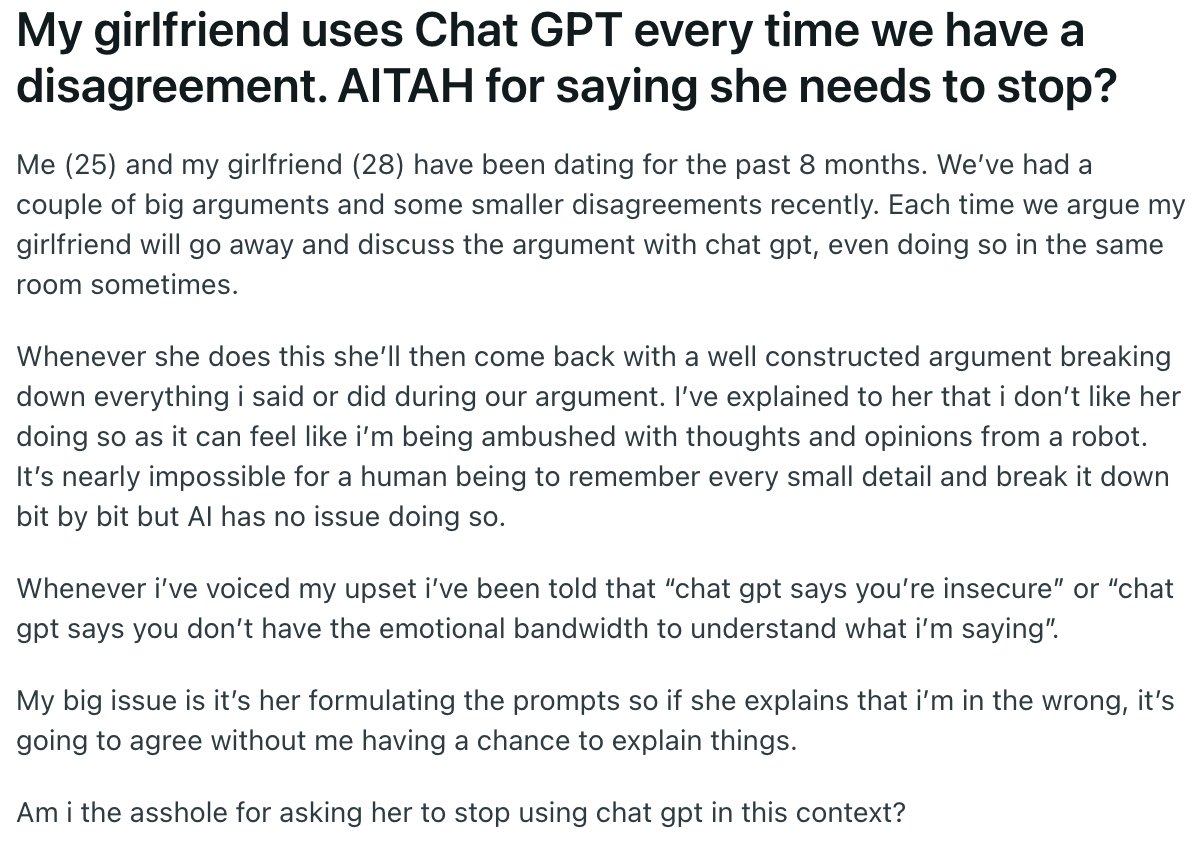

So I did the inevitable thing and asked ChatGPT what he should do... this is what I got:

This isn't bad on its face. But I've got this lingering dread that we're going to start seeing more nefarious responses at some point in the future.

Like "Your anxiety may be due to low blood sugar. Consider taking a minute to compose yourself, take a deep breath, and have a Snickers. You're not yourself without Snickers."

That's where AI search/chat is really headed. That's why so many companies with ad networks are investing in it. You can't block ads if they're baked into LLM responses.

Ahh, man-made horrors well within my comprehension

Ugh

This response was brought to you by BetterHelp and by the Mars Company.

Fuck, you beat me by 8 hours

Great minds think alike!

Interested in creating your own sponsored responses? For $80.08 monthly, your product will receive higher bias when it comes to related searches and responses.

Instead of

Imagine the [krzzt] possibilities!

Yeah, I was thinking he obviously needs to start responding with ChatGPT. Maybe they could just have the two phones use audio mode and have the argument for them instead. Reminds me of that old Star Trek episode where, instead of war, belligerent nations just ran a computer simulation of the war and then each side humanely euthanized that many people.

AI: *ding* Our results indicate that you must destroy his Xbox with a baseball bat in a jealous rage.

GF: Do I have to?

AI: You signed the terms and conditions of our service during your Disney+ trial.

Jesus Christ to all the hypotheticals listed here.

Not a judgement on you, friend. You've put forward some really good scenarios here and if I'm reading you right you're kinda getting at how crazy all of this sounds XD

Oh yeah, totally, I meant that as an absurd joke haha.

I’m also a little disturbed that people trust ChatGPT enough to outsource their relationship communication to it. Every time I’ve tried to run it through its paces, it seems super impressive and lifelike, but as soon as I try to use it for work subjects I know fairly well, it becomes clear it doesn’t know what’s going on and that it’s basically just making shit up.

I have a friend who's been using it to compose all the apologies they don't actually mean. Lol

That is kinda brilliant

I like it as a starting point to a subject I'm going to research. It seems to have mostly the right terminology and a rough idea of what those mean. This helps me to then make more accurate searches on the subject matter.

Yeah I could imagine that. I’ve also been fairly impressed with it for making something more concise and summarized (I sometimes write too much crap and realize it’s too much).

Yeah, ChatGPT is programmed to be a robotic yes-man.