For those who are interested.

DISCLAIMER:

I DON'T KNOW WHETHER THE GAME HAS DRM THAT WILL PREVENT YOU FROM PLAYING ON LINUX. I DON'T OWN THE GAME; THE BENCHMARK TOOL IS FREELY AVAILABLE, THOUGH, AND THAT'S WHAT I'VE TESTED.

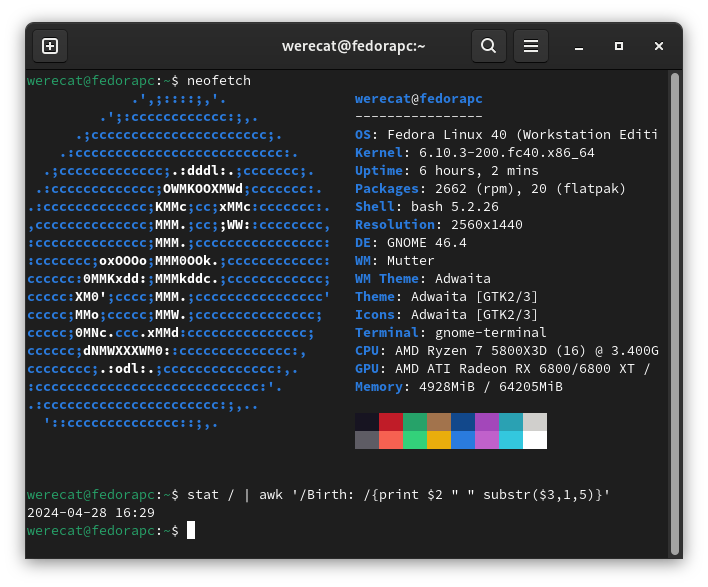

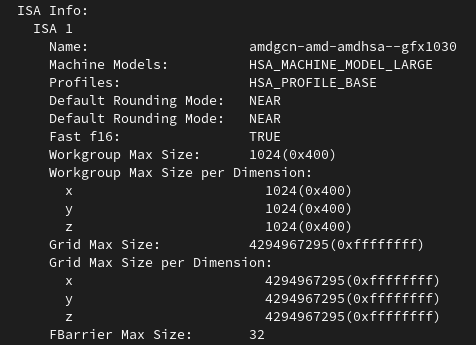

- Fedora 40

- Ryzen 7 5800X3D

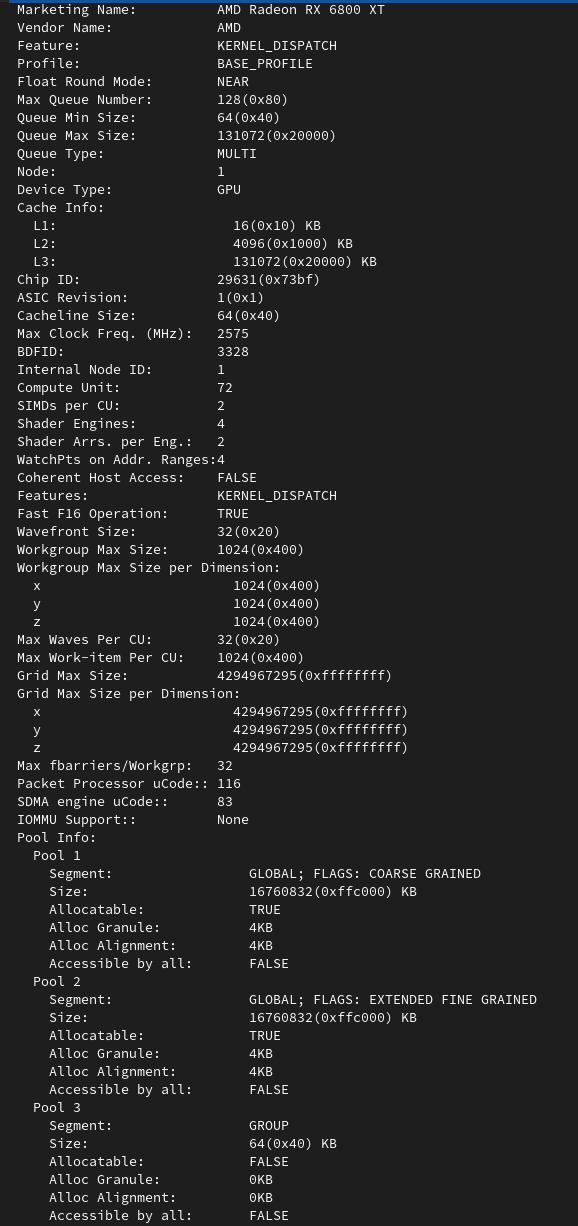

- RX 6800 XT Sapphire Pulse

- 4x16GB DDR4 3600MT/s (Quad Rank with manual tune)

I run a minor overclock on the GPU: 2600 MHz core clock, no VRAM OC (it's broken on Linux), -50 mV on the core, and the power limit set to 312 W.

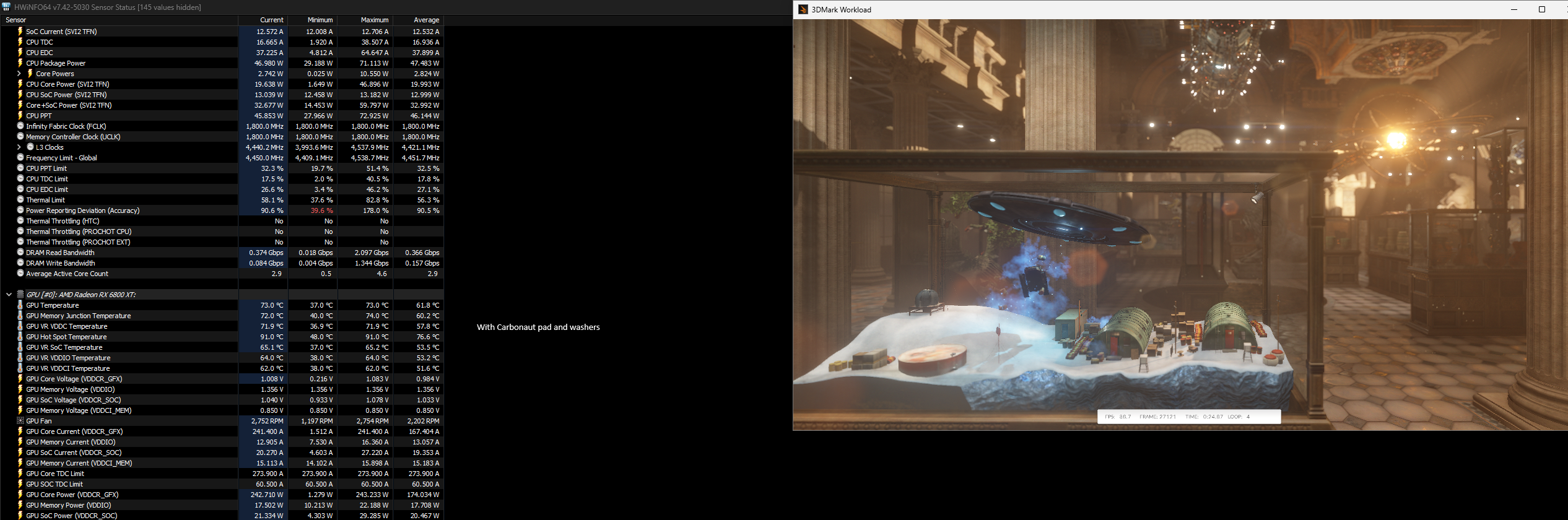

Results (Motion Blur OFF in all runs):

- 1440p High, Native

  - Avg = 65 FPS
  - Max = 75 FPS
  - Min = 55 FPS
  - 5% Low = 58 FPS

- 1440p High, FSR at 75% scaling

  - Avg = 87 FPS
  - Max = 106 FPS
  - Min = 73 FPS
  - 5% Low = 78 FPS

- 1440p High, TSR at 75% scaling

  - Avg = 85 FPS
  - Max = 100 FPS
  - Min = 70 FPS
  - 5% Low = 76 FPS
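To put the upscaling results in relative terms, here is a small Python sketch that computes the average and 5% low uplift over native using only the numbers listed above. The internal render resolution part assumes the "75% scaling" slider applies per axis (as Unreal Engine's screen percentage does); I haven't verified that for this benchmark, so treat that line as an assumption.

```python
# FPS figures from the 1440p High runs above (Motion Blur off).
results = {
    "Native": {"avg": 65, "max": 75, "min": 55, "low5": 58},
    "FSR 75%": {"avg": 87, "max": 106, "min": 73, "low5": 78},
    "TSR 75%": {"avg": 85, "max": 100, "min": 70, "low5": 76},
}


def uplift_pct(new: float, base: float) -> float:
    """Percentage change of `new` relative to `base`."""
    return (new / base - 1.0) * 100.0


def render_resolution(width: int, height: int, scale: float) -> tuple[int, int]:
    """Internal render resolution, ASSUMING the scale factor applies per axis."""
    return round(width * scale), round(height * scale)


native = results["Native"]
for name, r in results.items():
    if name == "Native":
        continue
    avg_gain = uplift_pct(r["avg"], native["avg"])
    low_gain = uplift_pct(r["low5"], native["low5"])
    print(f"{name}: avg {avg_gain:+.1f}%, 5% low {low_gain:+.1f}%")

w, h = render_resolution(2560, 1440, 0.75)
share = (w * h) / (2560 * 1440)
print(f"75% of 2560x1440 -> {w}x{h} ({share:.0%} of native pixels)")
```

Running it prints roughly +33.8% average / +34.5% 5% low for FSR and +30.8% / +31.0% for TSR. Under the per-axis assumption, 75% scaling renders at 1920x1080, which is only about 56% of the native pixel count.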

I found TSR more pleasing to my eyes. It's a bit blurrier, but I find FSR's shimmering more distracting in motion. In static scenes, FSR definitely looks better.

The game looks well optimized. If you're only targeting 60 FPS with some upscaling, you can probably run most settings on Very High (assuming the game performs like the benchmark). The benchmark is also quite GPU heavy and barely puts any load on the CPU: my 5800X3D drew less than 20 W for the entire run. The actual game may well be quite a bit more CPU heavy than that.

You can definitely set Textures to Cinematic quality with barely any performance hit if you have a card with enough VRAM, and the textures do look quite nice on Cinematic.
