this post was submitted on 19 Oct 2023

3 points (100.0% liked)

196

16215 readers

2265 users here now

Be sure to follow the rule before you head out.

Rule: You must post before you leave.

founded 1 year ago

MODERATORS

you are viewing a single comment's thread

view the rest of the comments

view the rest of the comments

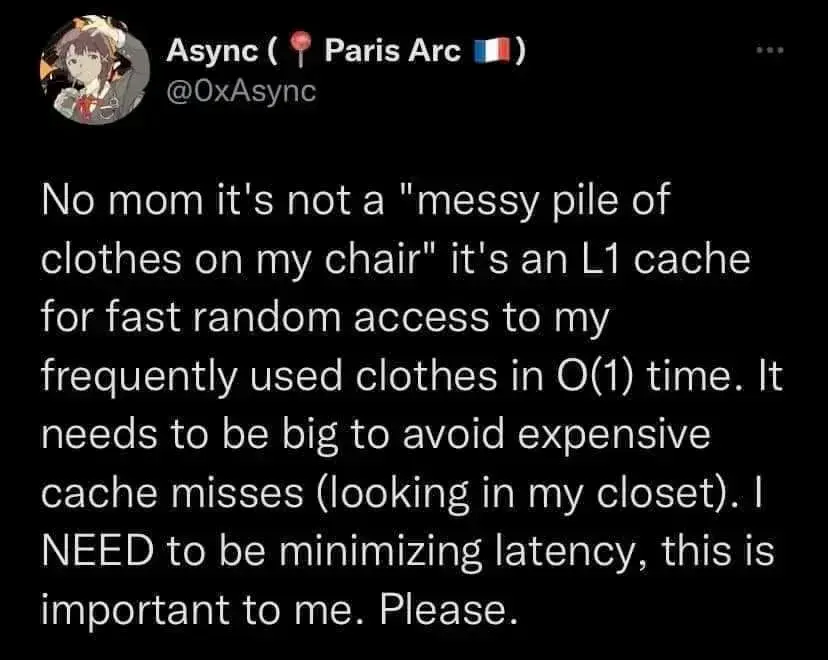

Why would you use a pile out of all data structures, only adding is in ϴ(1), searching is in ϴ(n).

I suggest throwing the clothes on the floor and remembering the spot they landed on. That's ϴ(1) for adding and for searching, far superior to a stack of clothes on a chair.

Side note: fuck big O notation, use big ϴ notation >:(

i think that's the point, it's not a "messy pile", it's actually a completely organized cache; depending on the replacement policy it can appear messy, but you keep the offset and address stored locally for fast access and more hits (i remember that i put some arguably clean socks somewhere in the corner over there)

Ok, I didn't understand roughly half of your comment because I don't actually know how cache works in practice

BUT

a messy pile of clothes represents a stack, doesn't it? And a stack makes a horrible cache because unlike a simple array you don't have fast random access. You'd have to dig through the entire pile of clothes to get to the bottom which takes a lot of time.

A cache is not a stack, it's memory stored in parallel cells. The CPU could theoretically, depending on the implementation, directly find the data it's looking for by going to the address of the cell it remembers that it's in.

Not all L1 caches operate the same, but in almost all cases, it's easy to actually go and get the data no matter where it physically is. If the data is at address 0 or at address 512, they both take the same time to fetch. The problem is if you don't know where the data is, in which case you have to use heuristics to guess where it might be, or in the worst case check absolutely everywhere in the cache only to find it at the very last place... or the data isn't there at all. In which case you'd check L2 cache, or RAM. The whole purpose of a cache is to randomly store data there that the CPU thinks it might need again in the future, so fast access is key. And in the most ideal case, it could theoretically be done in O(1).

ETA: I don't personally work with CPUs so I could be very wrong, but I have taken a few CPU architecture classes.