this post was submitted on 19 Jul 2024

2002 points (99.1% liked)

linuxmemes



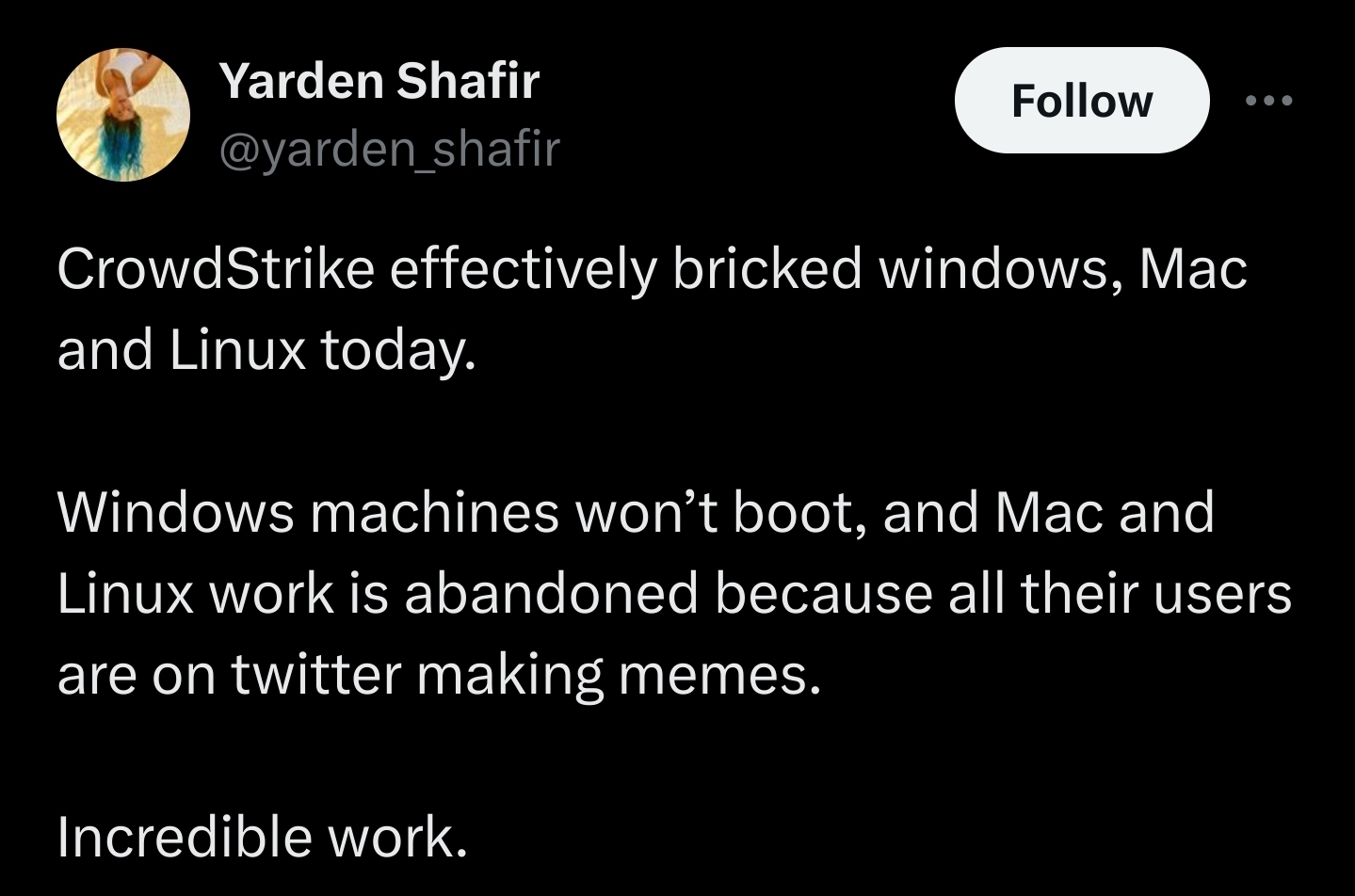

As a career QA, I just do not understand how this got through. Do they not use their own software? Do they not have a UAT program?

Heads will roll for this

From what I've read, it sounds like the update file that was causing the problems was entirely filled with zeros; the patched file was the same size but had data in it.

My entirely speculative theory is that the update file that they intended to deploy was okay (and possibly passed internal testing), but when it was being deployed to customers there was some error which caused the file to be written incorrectly (or somehow a blank dummy file was used). That would mean the original update could have been through testing but wasn't what actually ended up being deployed to customers.

I also assume that it's very difficult for them to conduct UAT given that a core part of their protection comes from being able to fix possible security issues before they are exploited. If they did extensive UAT prior to deploying updates, it would both slow down the speed with which they can fix possible issues (and therefore allow more time for malicious actors to exploit them) and give malicious parties time to update their attacks in response to the upcoming changes, which may become public knowledge when they are released for UAT.

There's also just an issue of scale; they apparently regularly release several updates like this per day, so I'm not sure how UAT could even be conducted at that pace. Granted, I've only ever personally been involved with UAT for applications that had quarterly (major) updates, so there might be ways to get it done several times a day that I'm not aware of.

None of that is to take away from the fact that this was an enormous cock-up, and that whatever processes they have in place are clearly not sufficient. I completely agree that whatever they do for testing these updates has failed in a monumental way. My work was relatively unaffected by this, but I imagine there are lots of angry customers who are rightly demanding answers for how exactly this happened, and how they intend to avoid something like this happening again.

If only there were a way to check a file's integrity.

Or maybe even automatically, like in any well-done CI/CD environment. At least their customers now know that they ARE the only test environment CS actually has or uses. ¯_(ツ)_/¯
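For what it's worth, the kind of check being joked about here is not much code. Below is a minimal sketch in Python, assuming the publisher ships a SHA-256 digest alongside each update file. The function names (`sha256_of`, `is_all_zero`, `safe_to_deploy`) are made up for illustration, and nothing here reflects how CS actually builds or ships its updates.

```python
import hashlib
from pathlib import Path


def sha256_of(path: Path, chunk_size: int = 65536) -> str:
    """Return the hex SHA-256 digest of a file, read in chunks."""
    digest = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def is_all_zero(path: Path, chunk_size: int = 65536) -> bool:
    """Return True if every byte in the file is 0x00 (also True for an empty file)."""
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            if chunk.count(0) != len(chunk):
                return False
    return True


def safe_to_deploy(path: Path, expected_sha256: str) -> bool:
    """Hypothetical pre-deploy gate: reject empty or zero-filled files,
    then require the digest to match the one recorded at build time."""
    if path.stat().st_size == 0 or is_all_zero(path):
        return False
    return sha256_of(path) == expected_sha256.lower()
```

In a CI/CD pipeline, something like `safe_to_deploy` would run on the exact artifact about to be pushed, not on the copy that passed testing earlier. A same-size file full of zeros would fail both the zero check and the digest comparison.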

"if only" - poem ("3 seconds" edition):

if only.

if only there existed CEOs in the world who could learn from their noob-dumb-brain-dead faults instead of forever talking about their successes, which were really always achieved by others.

if only.

if only there were shareholders willing to really look at that wreck who tells all his false success stories and lies, so CEOs could then maybe develop at least a minimum of willingness to learn. maybe a minimum of 3 seconds of learning per decade and per CEO could already help lots of companies a really huge lot.

if only.

if only there were damage compensation in effect so that shareholders would actually be willing to take at least some seconds - maybe 3 seconds of really looking at new CEOs could already help, but it's only shareholders, not sure if they would be able to concentrate that long or maybe they have already degenerated too much over generations of being purely parasitic - to look at the CEOs and the damage they cause before giving them the ability to cause that damage over and over again.

if only.

If I were part of CS management, I'd look into recently fired personnel.

I'd be looking to see who made the most shorting the stock.

A corrupt file wouldn't be nulled. I've never run across a file with all zeroes that wasn't intentional.

Maybe just look up the name of the CEO and where he already did something similar ;-)