this post was submitted on 20 Oct 2024

364 points (96.4% liked)

Math Memes

Memes related to mathematics.

Rules:

1: Memes must be related to mathematics in some way.

2: No bigotry of any kind.

founded 1 year ago

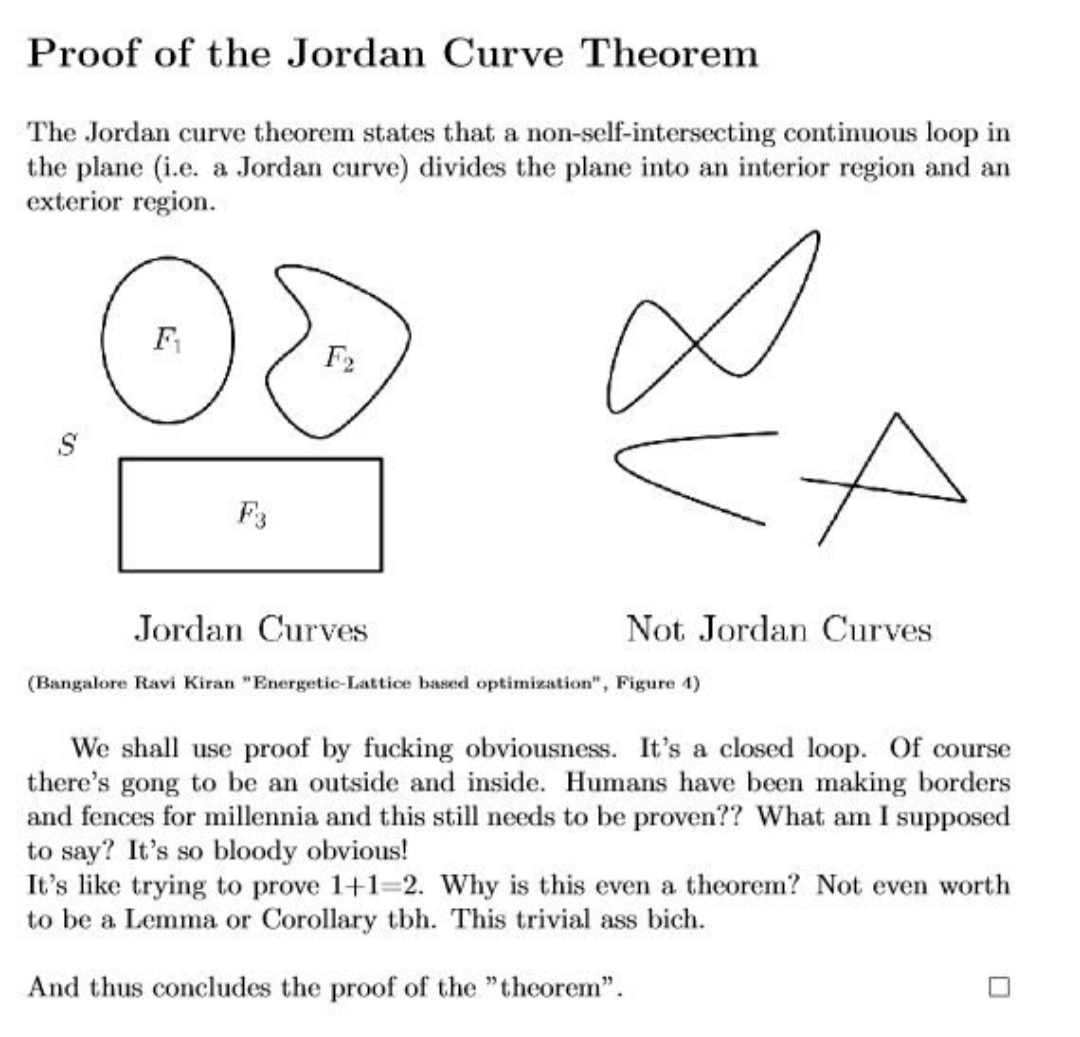

This guy would not be happy to learn about the 1+1=2 proof

One part of the 360 page proof in Principia Mathematica:

It's not a 360 page proof, it just appears that many pages into the book. That's the whole proof.

Weak-ass proof. You could fit this into a margin.

Upvoting because I trust you it's funny, not because I understand.

It's a reference to Fermat's Last Theorem.

Tl;dr is that a legendary mathematician wrote in a margin of a book that he's got a proof of a particular proposition, but that the proof is too long to fit into said margin. That was around the year 1637. A proof was finally found in 1994.

I thought it must be something like that, I expected it to be more specific though :)

Principia Mathematica should not be used as a source book for any actual mathematics because it’s an outdated and flawed attempt at formalising mathematics.

Axiomatic set theory provides a better framework for elementary problems such as proving 1+1=2.

I'm not believing it until I see your definition of arithmetical addition.

Friggin nerds!

A friend of mine took Introduction to Real Analysis in university and told me their first project was “prove the real number system.”

Real analysis when fake analysis enters

I don't know about fake analysis but I imagine it gets quite complex

Isn't "1+1" the definition of 2?

That assumes that 1 and 1 are the same thing. That they’re units which can be added/aggregated. And when they are that they always equal a singular value. And that value is 2.

It’s obvious, but the proof isn’t about stating the obvious. It’s about making clear what the concrete rules are in the symbolism/language of math, I believe.

This is what happens when the mathematicians spend too much time thinking without any practical applications. Madness!

The idea that something not practical is also not important is very sad to me. I think the least practical thing that humans do is by far the most important: trying to figure out what the fuck all this really means. We do it through art, religion, science, and... you guessed it, pure math. And I should include philosophy, I guess.

I sure wouldn't want to live in a world without those! Except maybe religion.

We all know that math is just a weirdly specific branch of philosophy.

Physics is just a weirdly specific branch of math

Only recently

Just like they did with that stupid calculus that... checks notes... made possible all of the complex electronics used in technology today. Not having any practical applications currently does not mean it never will.

I'd love to see the practical applications of someone taking 360 pages to justify that 1+1=2

The practical application isn’t the proof that 1+1=2. That’s just a side-effect. The application was building a framework for proving mathematical statements. At the time the Principia was written, maths wasn’t nearly as grounded in demonstrable facts and reason as it is today. Even though the Principia failed (for reasons that would only be worked out some 20 years later), the idea that every proposition should be proven from as few and as simple axioms as possible prevailed.

Now if you’re asking: Why should we prove math? Then the answer is: All of physics.

The answer to the last question is even simpler and broader than that. Math should be proven because all of science should be proven. That is what separates modern science from delusion and self-deception

It depends on what you mean by well defined. At a fundamental level, we need to agree on basic definitions in order to communicate. Principia Mathematica aimed to set a formal logical foundation for all of mathematics, so it needed to be as rigid and unambiguous as possible. The proof that 1+1=2 is just slightly more verbose when using their language.

Using the Peano axioms, which are often used as the basis for arithmetic, you first define a successor function, often denoted as •' and the number 0. The natural numbers (including 0) then are defined by repeated application of the successor function (of course, you also first need to define what equality is):

0 = 0

1 := 0'

2 := 1' = 0''

etc

Addition, denoted by •+• , is then recursively defined via

a + 0 = a

a + b' = (a+b)'

which quickly gives you that 1+1=2. But that requires you to take these axioms for granted. Mathematicians proved it with fewer assumptions, but the proof got a tad verbose.
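For anyone who wants to see the two addition rules above actually checked by a machine, here is a minimal Lean 4 sketch. The names `MyNat`, `add`, `one`, `two` and `one_add_one` are made up for illustration and are not taken from Principia Mathematica or from any library. It only mirrors the Peano-style definitions in this comment; it is not the Principia proof.

```lean
-- A hand-rolled copy of the natural numbers: 0 and a successor function.
inductive MyNat where
  | zero : MyNat
  | succ : MyNat → MyNat

-- Addition by recursion on the second argument:
--   a + 0  = a
--   a + b' = (a + b)'
def add : MyNat → MyNat → MyNat
  | a, .zero   => a
  | a, .succ b => .succ (add a b)

-- 1 := 0'   and   2 := 1' = 0''
def one : MyNat := .succ .zero
def two : MyNat := .succ one

-- 1 + 1 = 1 + 0' = (1 + 0)' = 1' = 2
-- Both sides unfold to the same term, so `rfl` is enough.
theorem one_add_one : add one one = two := rfl
```

The proof is a single `rfl` because, once the axioms are taken for granted, 1+1=2 is nothing more than unfolding the definitions, which is the "quickly gives you" step above.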

The "=" symbol defines an equivalence relation. So "1+1=2" is one definition of "2", defining it as equivalent to the addition of 2 identical unit values.

2*1 also defines 2. As does any even quantity divided by half its value. 2 is also the successor to 1 (and predecessor to 3), if you base your system on counting (or anti-counting).

The YouTuber Vi Hart has a video that whimsically explores the idea that numbers and operations can be looked at in different ways.

I'll always upvote a ViHart video.

Or the pigeonhole principle.

That's a bit of a misnomer, it's a derivation of the entirety of the core arithmetical operations from axioms. They use 1+1=2 as an example to demonstrate it.